As artificial intelligence continues to reshape industries worldwide, universities are increasingly exploring its potential in education. At Qatar Foundation (QF), AI is being integrated across academic disciplines and into university classrooms, where it is revolutionising both teaching and learning experiences.

One of the leading voices in this transformation is Professor Uday Chandra of Georgetown University in Qatar (GU-Q). In his course, Politics of AI, students critically engage with the societal and political implications of AI while actively using AI tools to enhance their academic work. Unlike many faculty members who remain hesitant about or resistant to AI in education, Chandra is embracing it, challenging students to navigate its benefits and limitations firsthand.

In an exclusive interview with Gulf Times, Chandra said he sees AI as an opportunity to rethink traditional learning methods. In his class, AI is used to help students structure their arguments, refine their writing, and develop creative approaches to assignments. Through guided experimentation, students learn not only how AI can enhance their academic work but also how to critically assess its limitations and ethical considerations.

Chandra explained his point of view regarding the future of AI in higher education to Gulf Times:

AI as a tool for learning

Q: How do you envision AI transforming the educational landscape in the coming years, particularly at Georgetown University in Qatar?

A: AI tools are already being used by students to read and write in college and beyond. From brainstorming for an essay or a presentation to editing one's prose, students use a range of AI platforms and apps to carry out tasks. At work in future, they will need to do the same to boost productivity in an ethical manner.

Sadly, universities, especially faculty and staff, are slow to embrace AI. Proper upskilling is needed, with annual refresher courses for university employees to understand how campuses can leverage AI tools without compromising academic integrity.

Q: What challenges do you foresee in integrating AI into educational practices, both for faculty and students?

A: The primary challenge, to my mind, is understanding what AI is and how it can help us be more productive. Fundamentally, AI, or human-inspired AI, is an extension of ourselves. We can train tools to help us compose a first draft of an email, for example, or to follow a step-by-step algorithm to accomplish a certain task. Once everyone has a shared understanding of the new technology available to us today, we can better wrap our heads around it.

The micro-challenges of implementing AI policies on a campus are, in my view, secondary. The IT department (or a new AI department/program) may need to offer a series of workshops, some of which must be mandatory from an HR perspective to ensure a basic minimum awareness of what AI is and does.

Faculty should play with these tools to understand how lesson plans, exam questions, assignment design, etc., can improve with the automation of some work processes. Similarly, students can write better and improve their understanding of what they're being taught by embracing AI. With training, both faculty and students can also come to appreciate that unethical conduct leads to the creation of poor-quality content. Being ethical and being accurate are closely connected.

Q: What AI tools do you currently recommend for students to use in their assignments, and how do they enhance the learning experience?

A: There are too many to list here, but ChatGPT is by far the most commonly used tool. I also recommend Claude, Google NotebookLM, and Gemini, which are related but different products. There are also specific tools for generating a preliminary literature review or bibliography, and students pursuing research can be introduced to these. Similarly, PowerPoint and Canva now have AI features that can significantly enhance the quality of a presentation. Even a Kahoot quiz can be boosted by AI tools: upload a list of questions and AI can generate a set of quiz slides in a matter of seconds.

Q: How do you assess the accuracy of AI-generated content in student work, and what warnings do you provide to students about relying on these tools?

A: We need to model best practices in a classroom, whether AI-related or not. We must not be afraid to demonstrate how to use AI tools and platforms in a classroom setting. We should also reconsider assessment or the kinds of assignments we ask students to complete. Students need to see what bad or poor AI output looks like in order to avoid the temptation of a quick fix. We shouldn't overestimate young people's knowledge about AI tools. They are learning too, and universities have a responsibility to ensure that they understand how to use these tools accurately and ethically.

Q: How do you evaluate assignments that incorporate AI? Are there specific criteria you use to ensure academic integrity?

A: Instead of the old style of assignments that get students to repeat what was said in a lecture or textbook, we need to reimagine student assignments. We need to specifically design tasks that require AI use. For example, students can conduct an interview with a policymaker (or migrant worker) and compare it with an AI-generated interview transcript. The learning comes from this comparative exercise and the reflection it gives rise to. This is how humans learn. None of us can learn how to drive or swim by memorising terms and giving canned responses to questions. Then why should we force young people to learn this way? Once we reimagine school and college assignments, the problem of academic integrity will go away on its own. It exists now only as a remnant of an older way of teaching and learning, which, as we know, serves the majority of students poorly.

Q: There is often a stigma surrounding the use of AI in education. How do you address concerns from students and faculty about relying on AI tools?

A: I wouldn't call it stigma. It is mostly ignorance of what AI is and how its power can be harnessed without embarrassing ourselves. This is why I proposed training workshops all year round. It would help to have a dedicated group (a mix of IT, academic professionals, students and faculty) to come together and set campus standards on what is proper AI use (and what isn't). Each campus may have a slightly different approach, but clear communication of standards is essential. Otherwise, academic integrity is impossible because each professor is currently empowered to do as they please. Some colleagues worldwide are going back to blue book exams, which test nothing at all except short-term memory. This kind of panic response smacks of defensiveness: it tells students that their professor is afraid of them and, worse, too lazy to learn something new.

Q: How do you think upcoming generations will perceive the use of AI in education? Will the current stigma persist, or evolve?

A: I think AI is becoming commonplace now in universities and office spaces. It is becoming akin to Google Search or Wikipedia, which were stigmatised early on before becoming essential to our lives at work and beyond. I expect to see the same happening with AI tools. Normalising use is the first step: early adopters may have a head start, but over time, the curve shifts enough to accommodate the average person in society. All technology use follows the same pattern with early adopters, the majority, and laggards. This is the life cycle of technology adoption, and I don't see AI following a different trajectory.

Q: What skills do educators need to cultivate to effectively integrate AI into their teaching methodologies?

A: First, one needs to start with the basics: what is artificial intelligence, and how is it connected with and different from human intelligence? Thereafter, specific aspects of reading and writing with AI tools can explain how enhanced skill building is possible. AI is, after all, a domain of human creativity. We must experiment after an introduction to the basics. We do not need to become coders to use AI effectively, though it certainly helps to understand coding, algorithms, machine learning, etc.

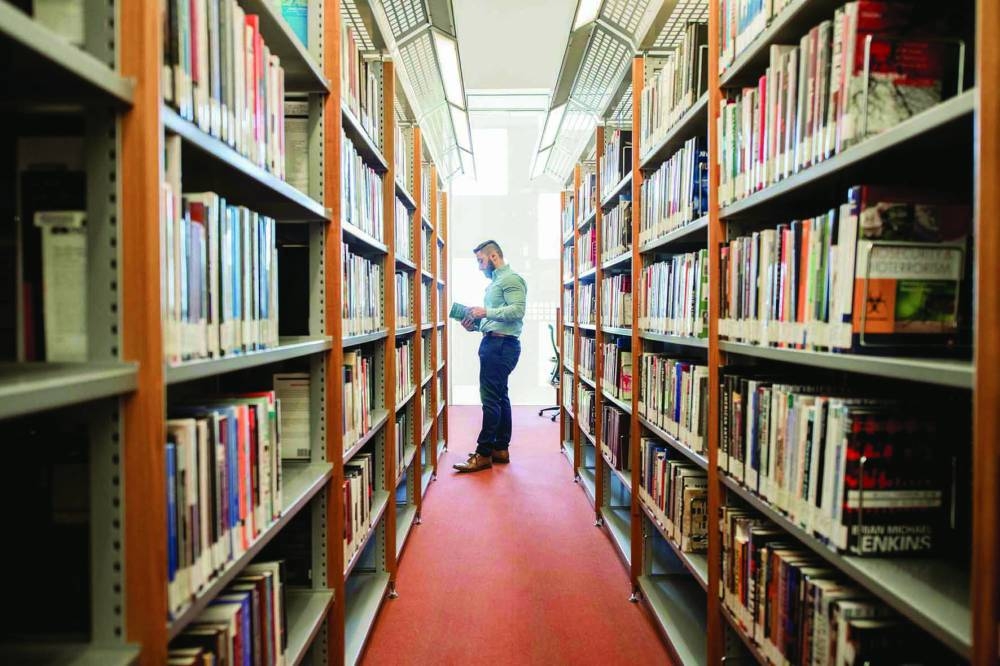

Advanced training workshops by library staff can introduce faculty and students to tools such as Elicit for literature reviews or Claude for making a bibliography. There is more to ChatGPT too. Beyond summarising, detailed reverse outlines with page references are possible. One can craft discussion questions to break down assigned readings more effectively than is possible with human intelligence only. Similarly, writing with AI tools must incorporate a whole gamut of activities from brainstorming to proofreading. AI is a terrific editor: it flags major and minor issues with a text, and raises questions for us to consider in terms of writing choices. Faculty are often unaware that their everyday practices can be enhanced significantly using the new tools available. Once they appreciate what is possible, a realistic sense of expectations from students will also emerge.

Q: Are there any collaborative projects or initiatives at Qatar Foundation that focus on AI in education?

A: Northwestern University and Carnegie Mellon University in Qatar have recently launched the Artificial Intelligence Initiative (AI2), a new strategic flagship initiative focused on meeting the global challenges of artificial intelligence and making decisive contributions to research, teaching, and professional development in that area.

Q: In your opinion, what areas related to AI in education should researchers focus on to better understand its impact?

A: I think each campus needs to develop teaching and learning units, which should help faculty incorporate AI into their classrooms and assignments. They should also evaluate whether, and by how much, AI boosts student learning outcomes. Unless one does this in a careful empirical manner, all our discussions about the future of AI in education will remain at an abstract, philosophical level. We need hard numbers and cold facts in each campus to figure out how to proceed. It is perfectly fine, even desirable, if an engineering campus works out a different modus operandi from, say, GU-Q.

Shifting faculty perspectives

With professors like Uday Chandra leading the way, QF is positioning itself at the cutting edge of AI-driven education, offering students not just theoretical knowledge but practical insights into the technologies shaping their future.

Education City campus

Georgetown University in Qatar (GU-Q) campus

Professor Uday Chandra of Georgetown University in Qatar (GU-Q)