While the rise of AI could revolutionise numerous sectors and unlock unprecedented economic opportunities, its energy intensity has raised serious environmental concerns. In response, tech companies promote frugal AI practices and support research focused on reducing energy consumption. But this approach falls short of addressing the root causes of the industry’s growing demand for energy.

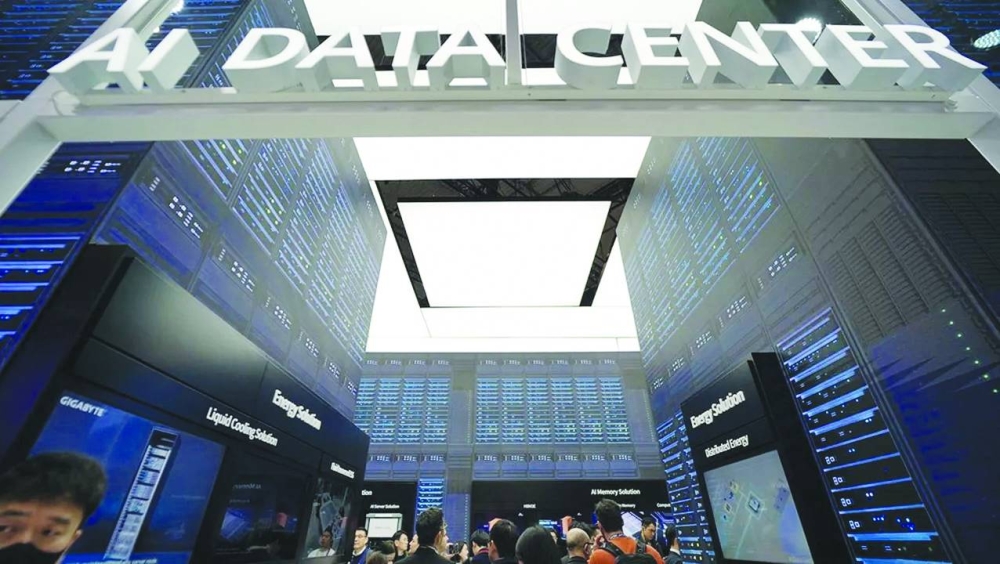

Developing, training, and deploying large language models (LLMs) is an energy-intensive process that requires vast amounts of computational power. With the widespread adoption of AI leading to a surge in data centres’ electricity consumption, the International Energy Agency projects that AI-related energy demand will double by 2026.

Data centres already account for 1-2% of global energy consumption – roughly the same as the entire airline industry. In Ireland, data centres accounted for a whopping 21% of total electricity consumption in 2023. As industries and citizens shift toward electrification to reduce greenhouse-gas emissions, rising AI demand places enormous strain on both power grids and the energy market. Unsurprisingly, Ireland’s grid operator, EirGrid, has imposed a moratorium on new data-centre developments in Dublin until 2028. Countries like Germany, Singapore, and China have also imposed restrictions on new data-centre projects.

To mitigate the environmental impact of emerging technologies, the tech industry has begun to promote the concept of frugal AI, which involves raising awareness of AI’s carbon footprint and encouraging end users – academics and businesses – to select the most energy-efficient model for any given task.

But while efforts to promote more conscious AI use are valuable, focusing solely on users' behaviour overlooks a critical fact: suppliers are the primary drivers of AI's energy consumption. At present, the factors with the greatest impact on AI's carbon footprint are model architecture, data-centre efficiency, and the carbon intensity of the electricity that powers those data centres, all of which are controlled by providers rather than users. And as the technology evolves, individual users will have even less influence over its sustainability. As AI models become increasingly embedded within larger applications, it will become harder for end users to discern which actions trigger resource-intensive processes.

These challenges are compounded by the rise of agentic AI – autonomous AI agents that collaborate to solve complex problems. While experts see this as the next big thing in AI development, such multi-agent interactions require even more computational power than today's most advanced LLMs, potentially exacerbating the technology's environmental impact.

Moreover, shifting the responsibility for reducing AI's carbon footprint to users is counterproductive, given the industry's lack of transparency. Most cloud providers do not yet disclose emissions data specific to generative AI, which makes it difficult for users to assess the environmental impact of their own AI use.

A more effective approach would be for AI providers to give consumers detailed emissions data. Increased transparency would empower users to make informed decisions while encouraging suppliers to develop more energy-efficient technologies. With access to emissions data, consumers could compare AI applications and select the most energy-efficient model for a specific task. Businesses, too, could more easily choose a traditional IT solution over an energy-intensive generative AI system if the overall impact were clear from the outset. By working together, AI companies and consumers could balance AI's potential benefits with its environmental costs.

To be sure, frugal AI may lead to some efficiency gains. But it does not address the core problem of AI’s insatiable energy demand. By providing greater transparency about energy consumption, sharing comprehensive emissions data, and developing standardised metrics for AI models, companies could help clients optimise their carbon budgets and adopt more sustainable practices.

The automotive industry offers a useful model for increasing energy transparency in AI development. By labelling the energy efficiency of their vehicles, auto manufacturers allow buyers to make more sustainable choices. Generative AI providers could adopt a similar approach and establish standardised metrics to capture the environmental impact of their models. One such metric could be electricity consumption per token, which quantifies the amount of energy required for an AI model to process a single unit of text.
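To make the per-token metric more concrete, here is a minimal Python sketch. It assumes a provider has measured the total electricity a model draws over a fixed test workload and has counted the tokens processed during that workload. The names `ModelMeasurement`, `task_emissions_g` and `rank_by_efficiency` are hypothetical, and the figures in the usage section are placeholders rather than measurements of any real model. The sketch divides energy by tokens to get watt-hours per token and ranks models the way an efficiency label might. It then multiplies by an assumed grid carbon intensity to convert energy into emissions, because identical energy use produces different emissions depending on the electricity mix.

```python
from dataclasses import dataclass


@dataclass
class ModelMeasurement:
    """Hypothetical energy measurement for one model on a fixed test workload."""
    name: str
    energy_wh: float  # electricity drawn while processing the workload (watt-hours)
    tokens: int       # tokens processed during the workload (input + output)

    def wh_per_token(self) -> float:
        """Electricity consumption per token, in watt-hours."""
        if self.tokens <= 0:
            raise ValueError("tokens must be positive")
        return self.energy_wh / self.tokens


def task_emissions_g(model: ModelMeasurement, task_tokens: int,
                     grid_g_per_kwh: float) -> float:
    """Estimate grams of CO2e for a task of `task_tokens` tokens, given an
    assumed grid carbon intensity in grams CO2e per kilowatt-hour."""
    task_kwh = model.wh_per_token() * task_tokens / 1000  # Wh -> kWh
    return task_kwh * grid_g_per_kwh


def rank_by_efficiency(models: list[ModelMeasurement]) -> list[ModelMeasurement]:
    """Order models from lowest to highest energy per token, like an efficiency label."""
    return sorted(models, key=lambda m: m.wh_per_token())


if __name__ == "__main__":
    # Placeholder figures for illustration only; not measurements of any real model.
    models = [
        ModelMeasurement("large-model", energy_wh=500.0, tokens=1_000_000),
        ModelMeasurement("small-model", energy_wh=120.0, tokens=1_000_000),
    ]
    for m in rank_by_efficiency(models):
        grams = task_emissions_g(m, task_tokens=2_000, grid_g_per_kwh=300.0)
        print(f"{m.name}: {m.wh_per_token():.6f} Wh/token, "
              f"~{grams:.3f} g CO2e per 2,000-token task")
```

The sketch keeps energy per token separate from grid carbon intensity. This lets the same model be compared fairly across data centres that run on different electricity mixes.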

Just as fuel-efficiency standards allow car buyers to compare different models and hold manufacturers accountable, businesses and individual users need reliable tools to evaluate the environmental impact of AI models before deploying them. By introducing transparent metrics, technology companies could not only steer the industry toward more sustainable innovation but also ensure that AI helps combat climate change instead of contributing to it. – Project Syndicate

• Boris Ruf is Research Scientist Lead at AXA.
